Topic 2 - Question Set 2

Question:76

You are developing an Azure Durable Function to manage an online ordering process.

The process must call an external API to gather product discount information.

You need to implement the Azure Durable Function.

Which Azure Durable Function types should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. Orchestrator

- B. Entity

- C. Client

- D. Activity

These HTTP APIs are extensibility webhooks that are authorized by the Azure Functions host but handled directly by the Durable Functions extension.

Reference:

https://docs.microsoft.com/en-us/azure/azure-functions/durable/durable-functions-http-api

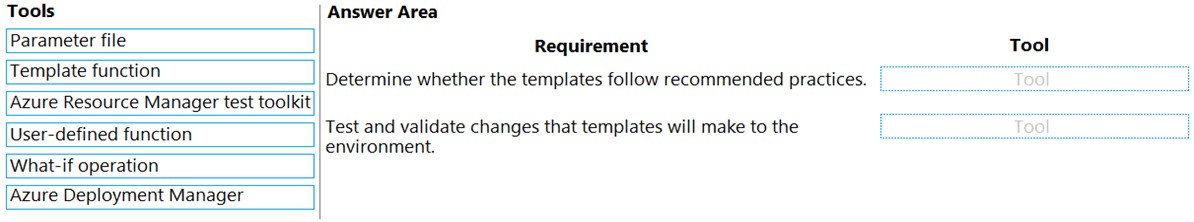

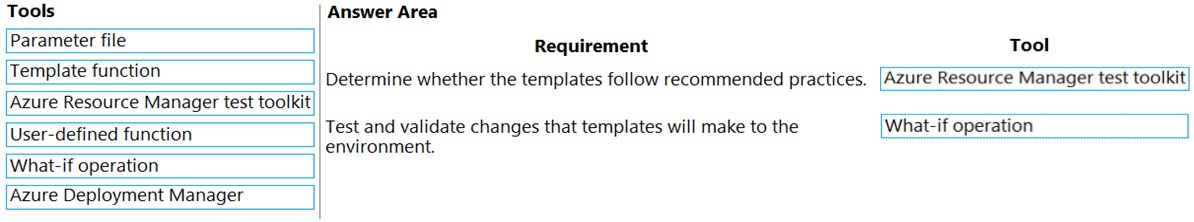

Question:77

You are authoring a set of nested Azure Resource Manager templates to deploy multiple Azure resources.

The templates must be tested before deployment and must follow recommended practices.

You need to validate and test the templates before deployment.

Which tools should you use? To answer, drag the appropriate tools to the correct requirements. Each tool may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

Box 1: Azure Resource Manager test toolkit

Use ARM template test toolkit -

The Azure Resource Manager template (ARM template) test toolkit checks whether your template uses recommended practices. When your template isn't compliant with recommended practices, it returns a list of warnings with the suggested changes. By using the test toolkit, you can learn how to avoid common problems in template development.

Box 2: What-if operation -

ARM template deployment what-if operation

Before deploying an Azure Resource Manager template (ARM template), you can preview the changes that will happen. Azure Resource Manager provides the what-if operation to let you see how resources will change if you deploy the template. The what-if operation doesn't make any changes to existing resources.

Instead, it predicts the changes if the specified template is deployed.

Reference:

https://docs.microsoft.com/en-us/azure/azure-resource-manager/templates/test-toolkit

https://docs.microsoft.com/en-us/azure/azure-resource-manager/templates/deploy-what-if

Question Set:3

Question:78

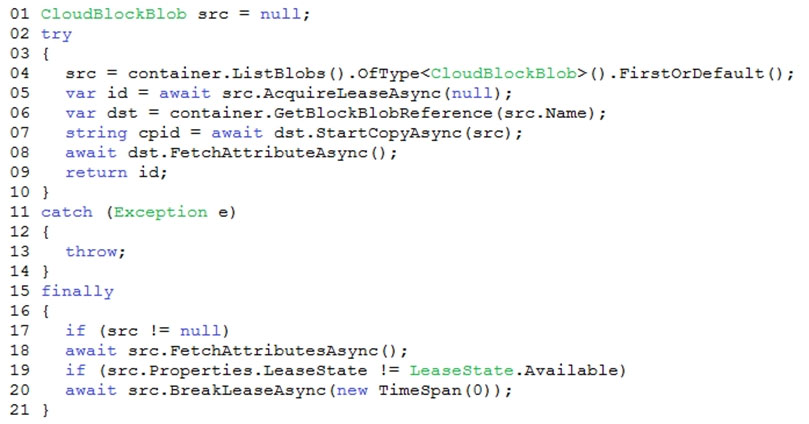

You are developing a solution that uses the Azure Storage Client library for .NET. You have the following code: (Line numbers are included for reference only.)

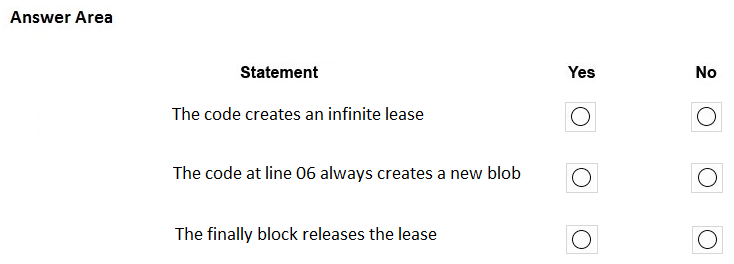

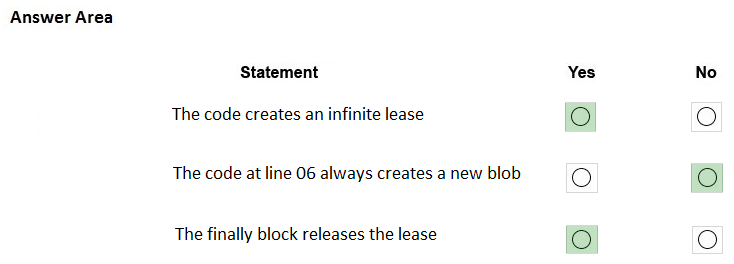

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:

Box 1: Yes -

AcquireLeaseAsync does not specify leaseTime.

leaseTime is a TimeSpan representing the span of time for which to acquire the lease, which will be rounded down to seconds. If null, an infinite lease will be acquired. If not null, this must be 15 to 60 seconds.
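The leaseTime rule described above can be sketched as a small validation helper. This is an illustrative Python sketch only, not part of the Azure Storage client library; the function name `normalize_lease_time` is hypothetical.

```python
from datetime import timedelta
from typing import Optional


def normalize_lease_time(lease_time: Optional[timedelta]) -> Optional[int]:
    """Return the lease duration in whole seconds, or None for an infinite lease.

    Hypothetical helper mirroring the documented rule: a null lease time
    requests an infinite lease; otherwise the value is rounded down to
    seconds and must be between 15 and 60 seconds.
    """
    if lease_time is None:
        return None  # infinite lease
    seconds = int(lease_time.total_seconds())  # round down to whole seconds
    if not 15 <= seconds <= 60:
        raise ValueError("leaseTime must be 15 to 60 seconds, or null for an infinite lease")
    return seconds
```

Calling the helper with no lease time models the code in the question, which is why the lease is treated as infinite.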

Box 2: No -

The GetBlockBlobReference method just gets a reference to a block blob in this container.

Box 3: Yes -

The BreakLeaseAsync method initiates an asynchronous operation that breaks the current lease on this container.

Reference:

https://docs.microsoft.com/en-us/dotnet/api/microsoft.azure.storage.blob.cloudblobcontainer.acquireleaseasync

https://docs.microsoft.com/en-us/dotnet/api/microsoft.azure.storage.blob.cloudblobcontainer.getblockblobreference

https://docs.microsoft.com/en-us/dotnet/api/microsoft.azure.storage.blob.cloudblobcontainer.breakleaseasync

Question:79

You are building a website that uses Azure Blob storage for data storage. You configure Azure Blob storage lifecycle to move all blobs to the archive tier after 30 days.

Customers have requested a service-level agreement (SLA) for viewing data older than 30 days.

You need to document the minimum SLA for data recovery.

Which SLA should you use?

- A. at least two days

- B. between one and 15 hours

- C. at least one day

- D. between zero and 60 minutes

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-storage-tiers?tabs=azure-portal

Question:80

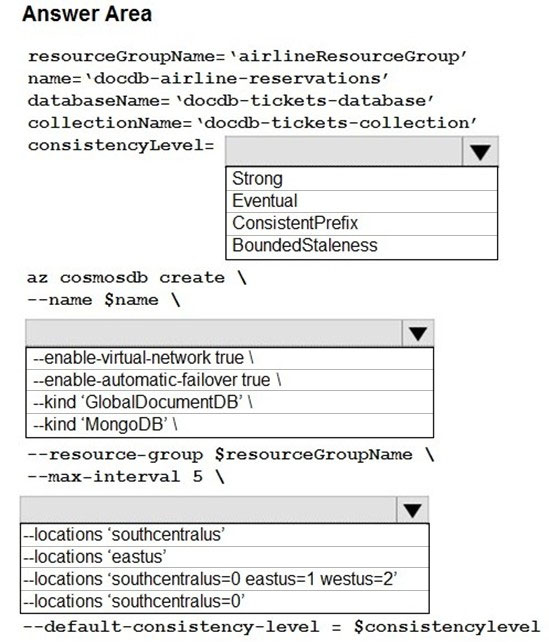

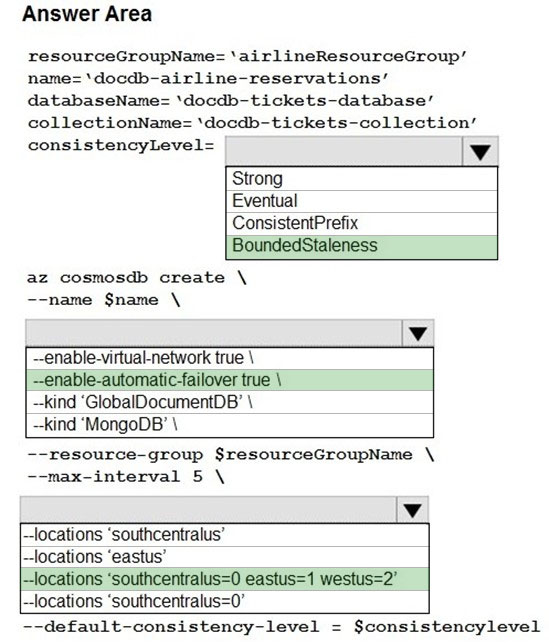

You are developing a ticket reservation system for an airline.

The storage solution for the application must meet the following requirements:

✑ Ensure at least 99.99% availability and provide low latency.

✑ Accept reservations even when localized network outages or other unforeseen failures occur.

✑ Process reservations in the exact sequence as reservations are submitted to minimize overbooking or selling the same seat to multiple travelers.

✑ Allow simultaneous and out-of-order reservations with a maximum five-second tolerance window.

You provision a resource group named airlineResourceGroup in the Azure South-Central US region.

You need to provision a SQL API Cosmos DB account to support the app.

How should you complete the Azure CLI commands? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Box 1: BoundedStaleness -

Bounded staleness: The reads are guaranteed to honor the consistent-prefix guarantee. The reads might lag behind writes by at most "K" versions (that is, "updates") of an item or by "T" time interval. In other words, when you choose bounded staleness, the "staleness" can be configured in two ways:

- The number of versions (K) of the item

- The time interval (T) by which the reads might lag behind the writes

Incorrect Answers:

Strong -

Strong consistency offers a linearizability guarantee. Linearizability refers to serving requests concurrently. The reads are guaranteed to return the most recent committed version of an item. A client never sees an uncommitted or partial write. Users are always guaranteed to read the latest committed write.

Box 2: --enable-automatic-failover true

For multi-region Cosmos accounts that are configured with a single-write region, enable automatic-failover by using Azure CLI or Azure portal. After you enable automatic failover, whenever there is a regional disaster, Cosmos DB will automatically failover your account.

Question: Accept reservations even when localized network outages or other unforeseen failures occur.

Box 3: --locations 'southcentralus=0 eastus=1 westus=2'

Need multi-region.
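The three selections above can be put together into one command line. The following Python sketch only assembles and prints the command; it does not call Azure. The account name `airline-cosmos` is a hypothetical placeholder, and the `--locations` value is copied from Box 3 as written, so the exact format accepted may differ between Azure CLI versions.

```python
import shlex
from typing import List


def build_cosmos_create_command(account_name: str, resource_group: str) -> List[str]:
    """Assemble an `az cosmosdb create` command from the three selected options."""
    return [
        "az", "cosmosdb", "create",
        "--name", account_name,              # hypothetical account name
        "--resource-group", resource_group,
        "--default-consistency-level", "BoundedStaleness",        # Box 1
        "--enable-automatic-failover", "true",                    # Box 2
        "--locations", "southcentralus=0 eastus=1 westus=2",      # Box 3
    ]


command = build_cosmos_create_command("airline-cosmos", "airlineResourceGroup")
print(shlex.join(command))  # shell-quoted form, ready to review before running
```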

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels

https://github.com/MicrosoftDocs/azure-docs/blob/master/articles/cosmos-db/manage-with-cli.md

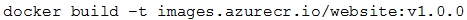

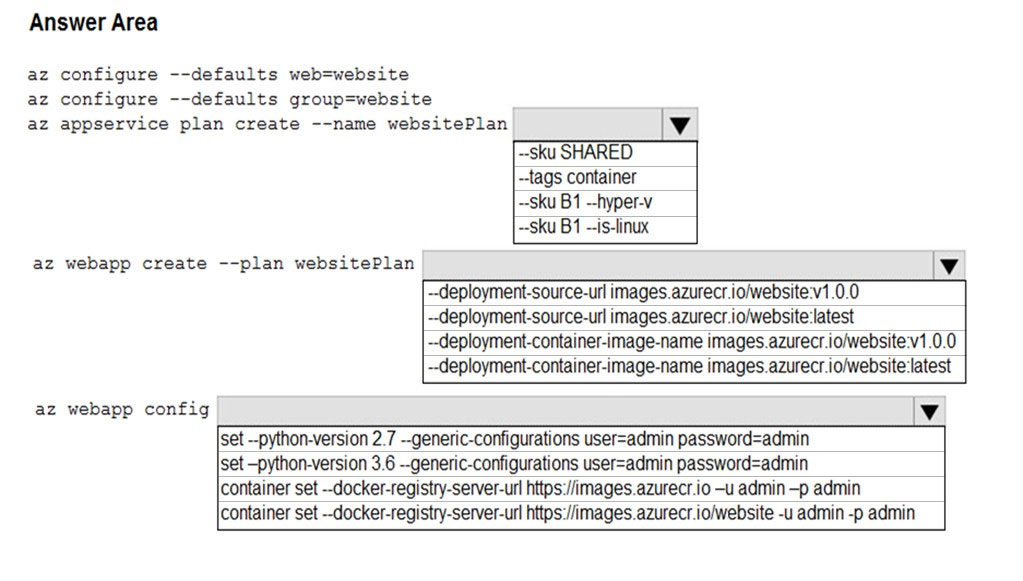

Question:81

You are preparing to deploy a Python website to an Azure Web App using a container. The solution will use multiple containers in the same container group. The

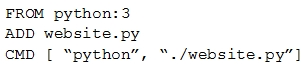

Dockerfile that builds the container is as follows:

You build a container by using the following command. The Azure Container Registry instance named images is a private registry.

The user name and password for the registry are both admin.

The Web App must always run the same version of the website regardless of future builds.

You need to create an Azure Web App to run the website.

How should you complete the commands? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Box 1: --SKU B1 --hyper-v -

--hyper-v

Host web app on Windows container.

Box 2: --deployment-source-url images.azurecr.io/website:v1.0.0

--deployment-source-url -u

Git repository URL to link with manual integration.

The Web App must always run the same version of the website regardless of future builds.

Incorrect:

--deployment-container-image-name -i

Linux only. Container image name from Docker Hub, e.g. publisher/image-name:tag.

Box 3: az webapp config container set -url https://images.azurecr.io -u admin -p admin

az webapp config container set

Set a web app container's settings.

Parameter: --docker-registry-server-url -r

The container registry server url.

The Azure Container Registry instance named images is a private registry.

Example:

az webapp config container set --docker-registry-server-url https://{azure-container-registry-name}.azurecr.io

Reference:

https://docs.microsoft.com/en-us/cli/azure/appservice/plan

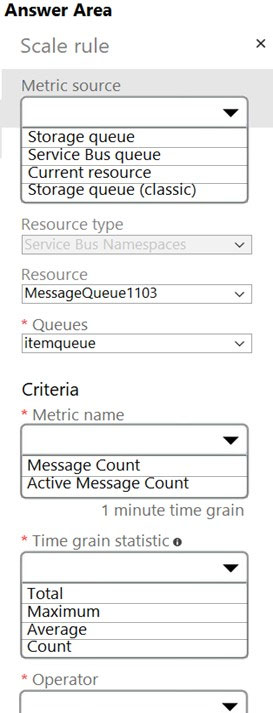

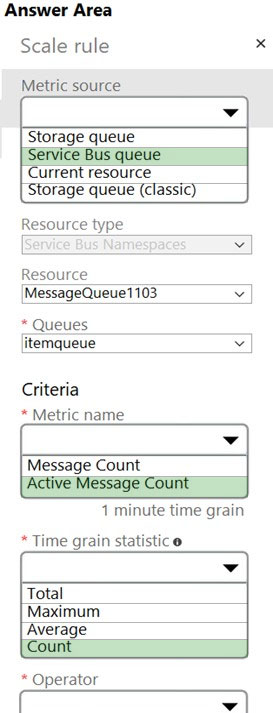

Question:82

You are developing a back-end Azure App Service that scales based on the number of messages contained in a Service Bus queue.

A rule already exists to scale up the App Service when the average queue length of unprocessed and valid queue messages is greater than 1000.

You need to add a new rule that will continuously scale down the App Service as long as the scale up condition is not met.

How should you configure the Scale rule? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Box 1: Service bus queue -

You are developing a back-end Azure App Service that scales based on the number of messages contained in a Service Bus queue.

Box 2: ActiveMessageCount -

ActiveMessageCount: Messages in the queue or subscription that are in the active state and ready for delivery.

Box 3: Count -

Box 4: Less than or equal to -

You need to add a new rule that will continuously scale down the App Service as long as the scale up condition is not met.

Box 5: Decrease count by
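The scale-in rule chosen above can be expressed as a simple decision function. This is a minimal Python sketch of the rule's logic, not Azure autoscale itself; the function name, the step of one instance and the minimum of one instance are assumptions made for illustration.

```python
def scale_in_decision(avg_active_messages: float, instance_count: int,
                      threshold: int = 1000, step: int = 1,
                      minimum_instances: int = 1) -> int:
    """Return the instance count after evaluating the scale-in rule once.

    The rule fires whenever the scale-up condition (greater than the
    threshold) is not met, that is, when the metric is less than or
    equal to the threshold.
    """
    if avg_active_messages <= threshold:
        # Decrease count by `step`, but never drop below the assumed minimum.
        return max(minimum_instances, instance_count - step)
    return instance_count
```

Using "less than or equal to" makes the scale-in condition the exact complement of the existing "greater than 1000" scale-up rule, so one of the two always applies.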

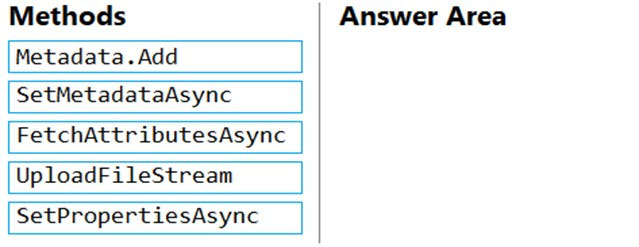

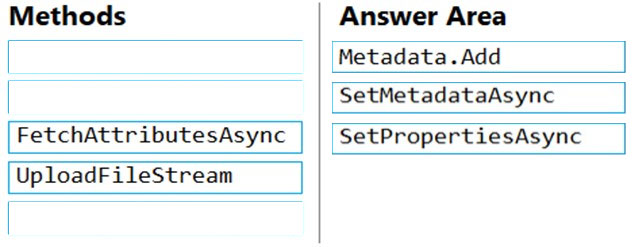

Question:83

You have an application that uses Azure Blob storage.

You need to update the metadata of the blobs.

Which three methods should you use to develop the solution? To answer, move the appropriate methods from the list of methods to the answer area and arrange them in the correct order.

Select and Place:

Answer:

Metadata.Add example:

// Add metadata to the dictionary by calling the Add method

metadata.Add("docType", "textDocuments");

SetMetadataAsync example:

// Set the blob's metadata.

await blob.SetMetadataAsync(metadata);

// Set the blob's properties.

await blob.SetPropertiesAsync();

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-properties-metadata

Question:84

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are developing an Azure solution to collect point-of-sale (POS) device data from 2,000 stores located throughout the world. A single device can produce 2 megabytes (MB) of data every 24 hours. Each store location has one to five devices that send data.

You must store the device data in Azure Blob storage. Device data must be correlated based on a device identifier. Additional stores are expected to open in the future.

You need to implement a solution to receive the device data.

Solution: Provision an Azure Event Grid. Configure the machine identifier as the partition key and enable capture.

Does the solution meet the goal?

- A. Yes

- B. No

Reference:

https://docs.microsoft.com/en-us/azure/event-grid/compare-messaging-services

Question:85

You develop Azure solutions.

A .NET application needs to receive a message each time an Azure virtual machine finishes processing data. The messages must NOT persist after being processed by the receiving application.

You need to implement the .NET object that will receive the messages.

Which object should you use?

- A. QueueClient

- B. SubscriptionClient

- C. TopicClient

- D. CloudQueueClient

Incorrect Answers:

B, C: In contrast to queues, topics and subscriptions provide a one-to-many form of communication in a publish and subscribe pattern. It's useful for scaling to large numbers of recipients.

Reference:

https://docs.microsoft.com/en-us/azure/service-bus-messaging/service-bus-queues-topics-subscriptions

Question:86

You are maintaining an existing application that uses an Azure Blob GPv1 Premium storage account. Data older than three months is rarely used.

Data newer than three months must be available immediately. Data older than a year must be saved but does not need to be available immediately.

You need to configure the account to support a lifecycle management rule that moves blob data to archive storage for data not modified in the last year.

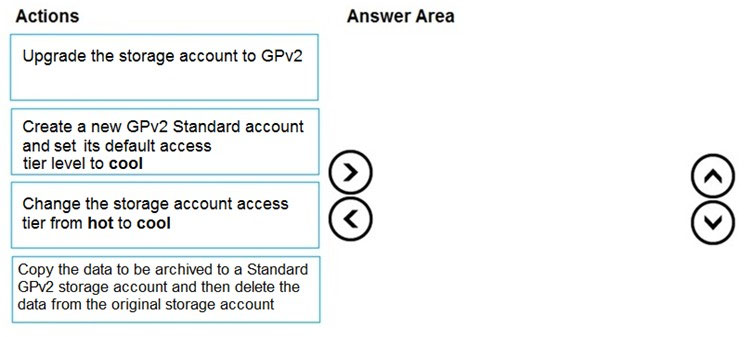

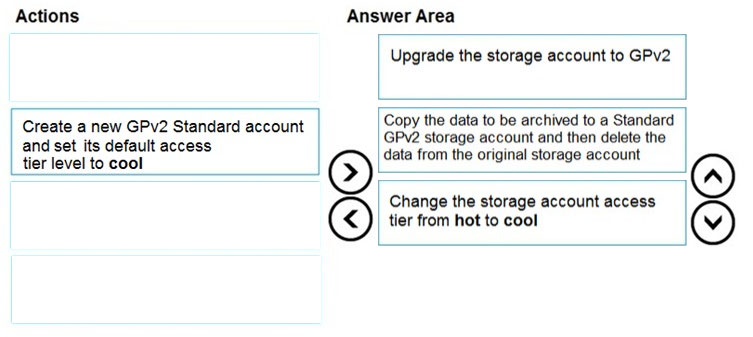

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Step 1: Upgrade the storage account to GPv2

Object storage data tiering between hot, cool, and archive is supported in Blob Storage and General Purpose v2 (GPv2) accounts. General Purpose v1 (GPv1) accounts don't support tiering.

You can easily convert your existing GPv1 or Blob Storage accounts to GPv2 accounts through the Azure portal.

Step 2: Copy the data to be archived to a Standard GPv2 storage account and then delete the data from the original storage account

Step 3: Change the storage account access tier from hot to cool

Note: Hot - Optimized for storing data that is accessed frequently.

Cool - Optimized for storing data that is infrequently accessed and stored for at least 30 days.

Archive - Optimized for storing data that is rarely accessed and stored for at least 180 days with flexible latency requirements, on the order of hours.

Only the hot and cool access tiers can be set at the account level. The archive access tier can only be set at the blob level.
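Once the account supports tiering, the lifecycle rule from the question could look roughly like the policy below. This Python sketch just builds and prints the policy document; the rule name is hypothetical, and 365 days is used as an assumed stand-in for "not modified in the last year".

```python
import json

# Lifecycle management policy that archives block blobs not modified for a year.
lifecycle_policy = {
    "rules": [
        {
            "name": "archive-after-one-year",  # hypothetical rule name
            "enabled": True,
            "type": "Lifecycle",
            "definition": {
                "filters": {"blobTypes": ["blockBlob"]},
                "actions": {
                    "baseBlob": {
                        "tierToArchive": {"daysAfterModificationGreaterThan": 365}
                    }
                },
            },
        }
    ]
}

print(json.dumps(lifecycle_policy, indent=2))
```

The archive action is defined per blob inside the rule, which is consistent with the note that the archive tier cannot be set at the account level.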

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-storage-tiers

Question:87

You develop Azure solutions.

You must connect to a NoSQL globally distributed database by using the .NET API.

You need to create an object to configure and execute requests in the database.

Which code segment should you use?

- A. new Container(EndpointUri, PrimaryKey);

- B. new Database(EndpointUri, PrimaryKey);

- C. new CosmosClient(EndpointUri, PrimaryKey);

// Create a new instance of the Cosmos Client

this.cosmosClient = new CosmosClient(EndpointUri, PrimaryKey);

//ADD THIS PART TO YOUR CODE

await this.CreateDatabaseAsync();

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql-api-get-started

Question:88

You have an existing Azure storage account that stores large volumes of data across multiple containers.

You need to copy all data from the existing storage account to a new storage account. The copy process must meet the following requirements:

✑ Automate data movement.

✑ Minimize user input required to perform the operation.

✑ Ensure that the data movement process is recoverable.

What should you use?

- A. AzCopy

- B. Azure Storage Explorer

- C. Azure portal

- D. .NET Storage Client Library

The copy operation is synchronous so when the command returns, that indicates that all files have been copied.

Reference:

https://docs.microsoft.com/en-us/azure/storage/common/storage-use-azcopy-blobs-copy

Question:89

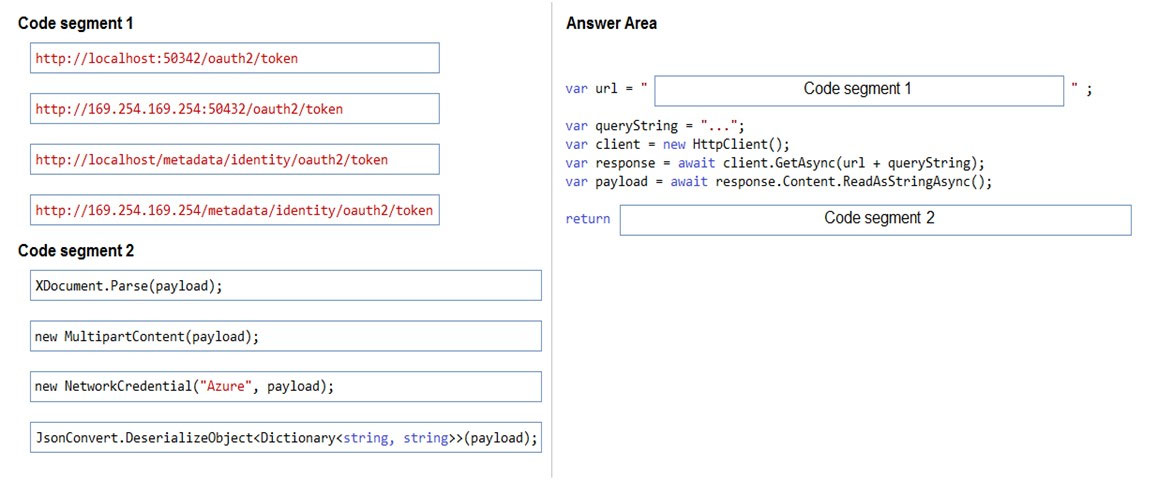

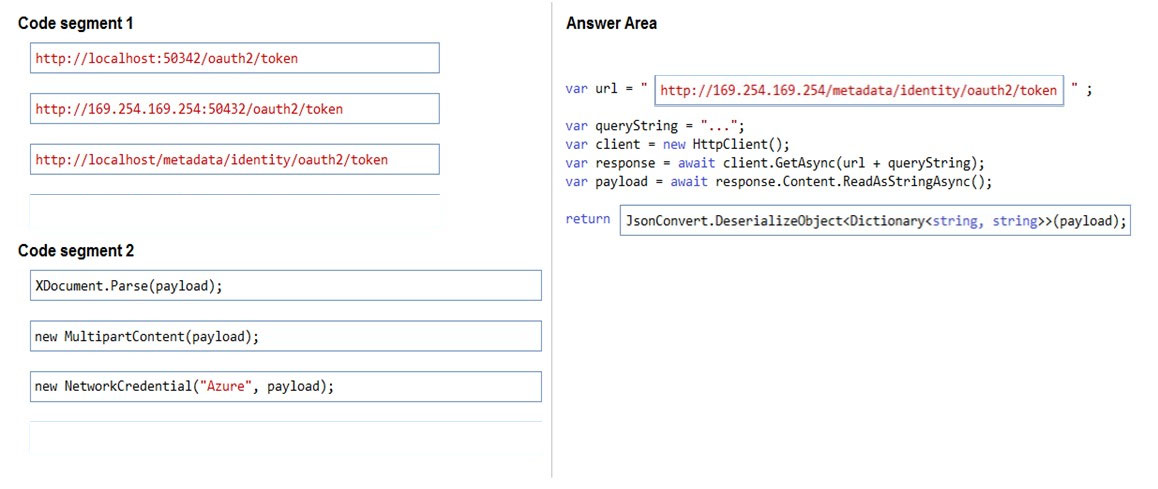

You are developing a web service that will run on Azure virtual machines that use Azure Storage. You configure all virtual machines to use managed identities.

You have the following requirements:

✑ Secret-based authentication mechanisms are not permitted for accessing an Azure Storage account.

✑ Must use only Azure Instance Metadata Service endpoints.

You need to write code to retrieve an access token to access Azure Storage. To answer, drag the appropriate code segments to the correct locations. Each code segment may be used once or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

Azure Instance Metadata Service endpoints "/oauth2/token"

Box 1: http://169.254.169.254/metadata/identity/oauth2/token

Sample request using the Azure Instance Metadata Service (IMDS) endpoint (recommended):

GET 'http://169.254.169.254/metadata/identity/oauth2/token?api-version=2018-02-01&resource=https://management.azure.com/' HTTP/1.1

Metadata: true

Box 2: JsonConvert.DeserializeObject<Dictionary<string,string>>(payload);

Deserialized token response; returning access code.
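The two boxes correspond to building the IMDS request and reading the token out of the JSON response. A minimal Python sketch of the same flow follows; the helper names are hypothetical, and the resource URI `https://storage.azure.com/` is an assumption based on the question's goal of accessing Azure Storage (the sample request above targets `https://management.azure.com/`).

```python
import json
import urllib.parse
import urllib.request

IMDS_TOKEN_ENDPOINT = "http://169.254.169.254/metadata/identity/oauth2/token"


def build_token_request(resource: str) -> urllib.request.Request:
    """Build the IMDS token request, including the required Metadata header."""
    query = urllib.parse.urlencode({"api-version": "2018-02-01", "resource": resource})
    return urllib.request.Request(
        f"{IMDS_TOKEN_ENDPOINT}?{query}", headers={"Metadata": "true"}
    )


def extract_access_token(payload: str) -> str:
    """Deserialize the JSON token response and return the access token."""
    return json.loads(payload)["access_token"]


request = build_token_request("https://storage.azure.com/")
# On an Azure VM with a managed identity, the call itself would look like:
# with urllib.request.urlopen(request) as response:
#     token = extract_access_token(response.read().decode("utf-8"))
```

The network call is left commented out because the IMDS address only answers from inside an Azure virtual machine.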

Reference:

https://docs.microsoft.com/en-us/azure/active-directory/managed-identities-azure-resources/how-to-use-vm-token

https://docs.microsoft.com/en-us/azure/service-fabric/how-to-managed-identity-service-fabric-app-code

Question:90

You are developing a new page for a website that uses Azure Cosmos DB for data storage. The feature uses documents that have the following format:

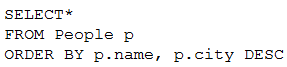

You must display data for the new page in a specific order. You create the following query for the page:

You need to configure a Cosmos DB policy to support the query.

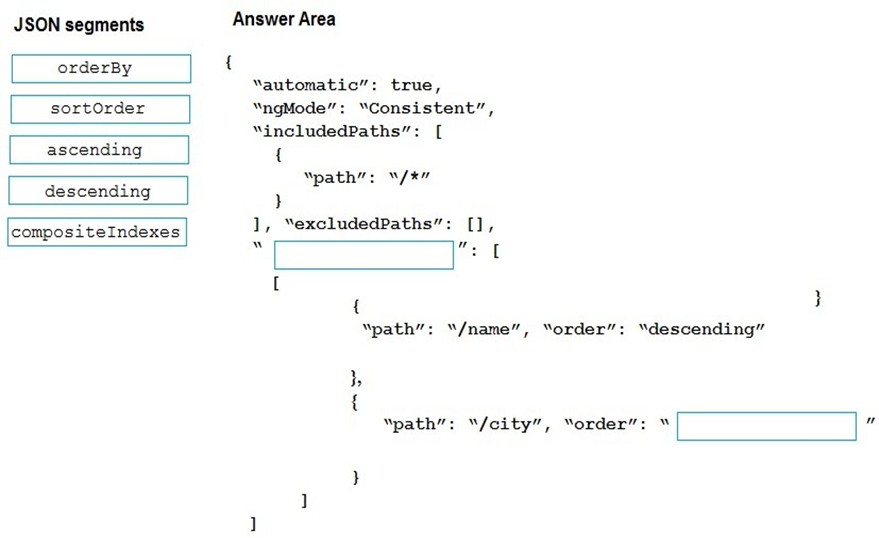

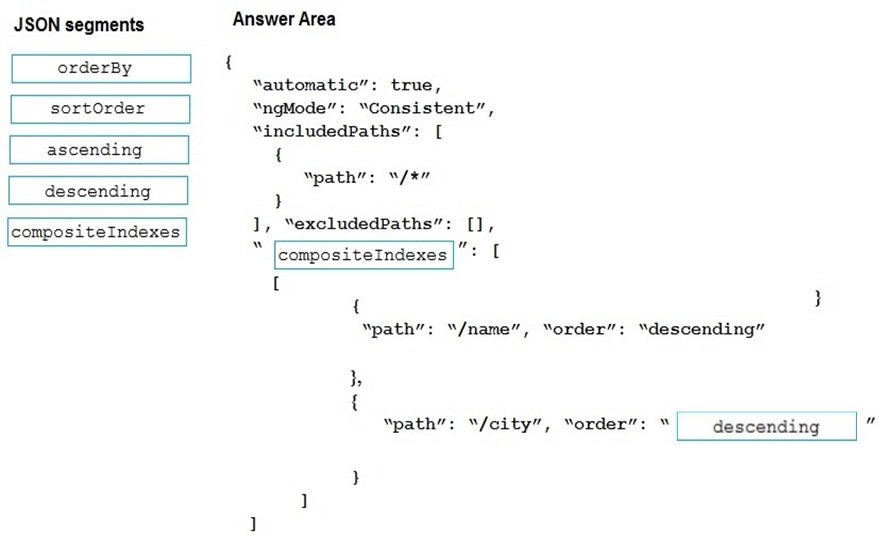

How should you configure the policy? To answer, drag the appropriate JSON segments to the correct locations. Each JSON segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

Box 1: compositeIndexes -

You can order by multiple properties. A query that orders by multiple properties requires a composite index.

Box 2: descending -

Example: Composite index defined for (name ASC, age ASC):

It is optional to specify the order. If not specified, the order is ascending.

{

"automatic":true,

"indexingMode":"Consistent",

"includedPaths":[

{

"path":"/*"

}

],

"excludedPaths":[],

"compositeIndexes":[

[

{

"path":"/name"

},

{

"path":"/age"

}

]

]

}
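A policy with an explicit sort order per path can be generated and checked as valid JSON with a short script. This is a Python sketch under stated assumptions: the query for this question is only available as an image, so the `/name` and `/age` paths and the mixed ascending/descending order are illustrative, and the `composite_index` helper is hypothetical.

```python
import json
from typing import Dict, List, Tuple


def composite_index(*paths: Tuple[str, str]) -> List[Dict[str, str]]:
    """Build one composite index from (path, order) pairs."""
    return [{"path": path, "order": order} for path, order in paths]


indexing_policy = {
    "automatic": True,
    "indexingMode": "Consistent",
    "includedPaths": [{"path": "/*"}],
    "excludedPaths": [],
    "compositeIndexes": [
        # Illustrative paths and orders; match them to the ORDER BY clause.
        composite_index(("/name", "ascending"), ("/age", "descending")),
    ],
}

print(json.dumps(indexing_policy, indent=2))
```

The order of the entries and their sort directions need to line up with the properties in the query's ORDER BY clause for the composite index to be used.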